|

After Width: | Height: | Size: 386 KiB |

|

Before Width: | Height: | Size: 256 KiB After Width: | Height: | Size: 256 KiB |

|

After Width: | Height: | Size: 145 KiB |

|

After Width: | Height: | Size: 1.0 MiB |

|

After Width: | Height: | Size: 1013 KiB |

|

After Width: | Height: | Size: 478 KiB |

@ -0,0 +1,441 @@ |

||||

--- |

||||

title: 'Top 7 Backend Frameworks to Use in 2024: Pro Advice' |

||||

description: 'Get expert advice on backend frameworks for 2024. Learn about the top 7 frameworks that can elevate your development process.' |

||||

authorId: fernando |

||||

excludedBySlug: '/backend/frameworks' |

||||

seo: |

||||

title: 'Top 7 Backend Frameworks to Use in 2024: Pro Advice' |

||||

description: 'Get expert advice on backend frameworks for 2024. Learn about the top 7 frameworks that can elevate your development process.' |

||||

ogImageUrl: 'https://assets.roadmap.sh/guest/top-backend-frameworks-jfpux.jpg' |

||||

isNew: true |

||||

type: 'textual' |

||||

date: 2024-09-27 |

||||

sitemap: |

||||

priority: 0.7 |

||||

changefreq: 'weekly' |

||||

tags: |

||||

- 'guide' |

||||

- 'textual-guide' |

||||

- 'guide-sitemap' |

||||

--- |

||||

|

||||

|

||||

|

||||

Choosing the right backend framework in 2024 can be crucial when you’re building web applications. While the programming language you pick is important, the backend framework you go with will help define how scalable, secure, and maintainable your application is. It’s the foundation that supports all the features your users interact with on the frontend and keeps everything running smoothly behind the scenes. |

||||

|

||||

So, it’s a decision you want to get right. |

||||

|

||||

In 2024, [backend development](https://roadmap.sh/backend) is more complex and interconnected than ever. Developers are working with APIs, microservices, cloud-native architectures, and ensuring high availability while keeping security at the forefront. It’s an era where the demand for real-time data, seamless integrations, and efficient performance is higher than ever. |

||||

|

||||

Whether you're building an enterprise-level application or a small startup, the right backend framework can save you time and headaches down the road. |

||||

|

||||

Let’s take a look at the following top backend development frameworks at the top of all lists for 2024: |

||||

|

||||

* NextJS |

||||

* Fastify |

||||

* SvelteKit |

||||

* Ruby on Rails |

||||

* Laravel |

||||

* Phoenix |

||||

* Actix |

||||

|

||||

## Criteria for Evaluating The Top Backend Frameworks |

||||

|

||||

How can you determine what “best backend framework” means for you? To answer that question, I’ll define a set of key factors to consider. Let’s break down the most important criteria that will help you make the best choice for your project: |

||||

|

||||

**Performance**: |

||||

|

||||

* A high-performing backend framework processes server-side tasks (e.g., database queries, user sessions, real-time data) quickly and efficiently. |

||||

* Faster processing improves user experience, especially in 2024 when speed is critical. |

||||

|

||||

**Scalability**: |

||||

|

||||

* The framework should handle increased traffic, larger datasets, and feature expansion without issues. |

||||

* It should smoothly scale for both small and large user bases. |

||||

|

||||

**Flexibility**: |

||||

|

||||

* A flexible framework adapts to new business or technical requirements. |

||||

* It should support various project types without locking you into a specific structure. |

||||

|

||||

**Community and Ecosystem**: |

||||

|

||||

* A strong community provides support through tutorials, forums, and third-party tools. |

||||

* A good ecosystem includes useful plugins and integrations for popular services or databases. |

||||

|

||||

**Learning Curve**: |

||||

|

||||

* An easy-to-learn framework boosts productivity and helps you get up to speed quickly. |

||||

* A framework should balance ease of learning with powerful functionality. |

||||

|

||||

**Security**: |

||||

|

||||

* A reliable framework includes built-in security features to protect user data and prevent vulnerabilities. |

||||

* It should help you comply with regulations and address security concerns from the start. |

||||

|

||||

**Future-Proofing**: |

||||

|

||||

* Choose a framework with a history of updates, a clear development roadmap, and a growing community. |

||||

* A future-proof framework ensures long-term support and relevance. |

||||

|

||||

### My go-to backend framework |

||||

|

||||

My favorite backend framework is Next.js because it has the highest scores from the group. |

||||

|

||||

That said, I’ve applied the above criteria to the best backend development frameworks I’m covering below in this guide. This table gives you a snapshot view of how they all compare according to my ratings, and I’ll explain the details further below. |

||||

|

||||

|

||||

|

||||

Of course, Next.js is the best one for me, and that works for me alone. You have to consider your own projects and your own context to understand what the best choice for you would be. |

||||

|

||||

Let’s get into the selection and what their strengths and weaknesses are to help you select the right one for you. |

||||

|

||||

## Top 7 Backend Frameworks in 2024 |

||||

|

||||

### Next.js |

||||

|

||||

|

||||

|

||||

Next.js is a full-stack React framework and one of the most popular backend frameworks in the JavaScript community. Over the years, it has evolved into a robust web development solution that supports static site generation (SSG), server-side rendering (SSR), and even edge computing. Backed by Vercel, it’s now one of the go-to frameworks for modern web development. |

||||

|

||||

#### 1\. Performance |

||||

|

||||

Next.js has a wonderful performance thanks to its ability to optimize for both static and dynamic generation. With server-side rendering and support for edge computing, it's built to handle high-performance requirements. Additionally, automatic code splitting ensures only the necessary parts of the app are loaded, reducing load times. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

Next.js is designed to scale easily, from small static websites to large-scale dynamic applications. Its ability to turn backend routes into serverless functions puts it at an unfair advantage over other frameworks. Paired with Vercel’s deployment platform, scaling becomes almost effortless. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

Next.js is one of the most flexible frameworks out there. It supports a wide range of use cases, from simple static websites to highly complex full-stack applications. With its API routes feature, developers can create powerful backends, making Next.js suitable for both frontend and backend development in a single framework. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

The Next.js community (just like the JavaScript community in general) is large and quite active, with an ever-growing number of plugins, integrations, and third-party tools. The framework has solid documentation and an active ecosystem, thanks to its close ties to both the React community and Vercel’s developer support. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

For developers already familiar with React, Next.js provides a relatively smooth learning curve. However, for those new to SSR, SSG or even RSC (React Server Components), there’s a bit of a learning curve as you adapt to these concepts (after all, you’re learning React and backend development at the same time). That said, the framework's excellent documentation and active community make it easier to grasp. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

Next.js doesn’t inherently have a wide array of built-in security tools, but it follows secure default practices and can be paired with Vercel’s security optimizations for additional protection. Out of the box, Next.js ensures some level of security against common web threats but will need further configuration depending on the app's complexity. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 7\. Future-Proofing |

||||

|

||||

Backed by Vercel, Next.js has a bright future. Vercel consistently pushes updates, introduces new features, and improves the overall developer experience. Given its adoption and strong support, Next.js is very future-proof, with no signs of slowing down. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

### Fastify |

||||

|

||||

|

||||

|

||||

Fastify is a lightweight and fast backend framework for Node.js, often seen as a high-performance alternative to Express.js. It was created with a strong focus on speed, low overhead, and developer-friendly tooling, making it a popular choice for developers building APIs and microservices. Fastify offers a flexible plugin architecture and features like schema-based validation and HTTP/2 support, setting it apart in the Node.js ecosystem. |

||||

|

||||

#### 1\. Performance |

||||

|

||||

Fastify shines when it comes to performance. It’s optimized for handling large amounts of requests with low latency, making it one of the fastest Node.js frameworks available. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

With a strong focus on scalability, Fastify is ideal for handling large applications and high-traffic scenarios. Its lightweight nature ensures that you can build scalable services with minimal resource consumption. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

Fastify’s [plugin architecture](https://fastify.dev/docs/latest/Reference/Plugins/) is one of its key strengths. This allows developers to easily extend the framework’s capabilities and tailor it to specific use cases, whether you’re building APIs, microservices, or even server-rendered applications. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

While Fastify’s community is not as large as Express.js or Next.js, it is steadily growing. The ecosystem of plugins and tools continues to expand, making it easier to find the right tools and libraries to fit your needs. However, its smaller ecosystem means fewer third-party tools compared to some of the more established frameworks. |

||||

|

||||

⭐ **Rating: 3/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

If you’re coming from Express.js or another Node.js framework, Fastify’s learning curve is minimal. Its API is designed to be familiar and easy to adopt for Node.js developers. While there are some differences in how Fastify handles things like schema validation and plugins, it’s a relatively smooth transition for most developers. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

Fastify incorporates built-in security features such as schema-based validation, which helps prevent vulnerabilities like injection attacks. The framework also supports HTTP/2 out of the box, which provides enhanced security and performance. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 7\. Future-Proofing |

||||

|

||||

Fastify has a strong development roadmap and is [consistently updated](https://github.com/fastify/fastify) with performance improvements and new features. The backing from a growing community and its continued adoption by large-scale applications make Fastify a solid bet for long-term use. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

### SvelteKit |

||||

|

||||

|

||||

|

||||

SvelteKit is a full-stack framework built on top of Svelte, a front-end compiler that moves much of the heavy lifting to compile time rather than runtime. SvelteKit was designed to simplify building modern web applications by providing server-side rendering (SSR), static site generation (SSG), and support for client-side routing—all in a performance-optimized package. In other words, it’s an alternative to Next.js. |

||||

|

||||

#### 1\. Performance |

||||

|

||||

SvelteKit leverages Svelte compile-time optimizations, resulting in fast runtime performance. Unlike frameworks that rely heavily on virtual DOM diffing, Svelte compiles components to efficient JavaScript code, which means fewer resources are used during rendering. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

While SvelteKit is excellent for small to medium-sized applications, its scalability for enterprise-level applications is still being tested by the developer community. It is possible to scale SvelteKit for larger applications, especially with the right infrastructure and server setup, but it may not yet have the same level of proven scalability as more mature frameworks like Next.js or Fastify. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

As with most web frameworks, SvelteKit is highly flexible, allowing developers to build everything from static sites to full-stack robust web applications. It provides SSR out of the box, making it easy to handle front-end and back-end logic in a single codebase. Additionally, it supports various deployment environments like serverless functions or traditional servers. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

The SvelteKit community is growing rapidly, and more tools, plugins, and resources are being developed to support it. While the ecosystem isn’t as mature as frameworks like React or Vue, the rate of adoption is promising. The official documentation is well-written, and there’s a growing number of third-party tools, libraries, and guides available for developers to tap into. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

For developers familiar with Svelte, the transition to SvelteKit is smooth and intuitive. However, if you're new to Svelte, there is a moderate learning curve, particularly in understanding Svelte’s reactivity model and SvelteKit's routing and SSR features. Still, the simplicity of Svelte as a framework helps ease the learning process compared to more complex frameworks like React or Angular. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

SvelteKit’s security features are still evolving, with basic protections in place but requiring developers to implement best practices to build really secure web applications. There are no significant built-in security tools like in some larger frameworks, so developers need to be cautious and handle aspects like input validation, cross-site scripting (XSS) protection, and CSRF manually. |

||||

|

||||

⭐ **Rating: 3/5** |

||||

|

||||

#### 7\. Future-Proofing |

||||

|

||||

Svelte’s increasing popularity and SvelteKit’s rapid development signal a bright future for the framework. The growing adoption of Svelte, backed by its simplicity and performance, ensures that SvelteKit will continue to be developed and improved in the coming years. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

### Ruby on Rails |

||||

|

||||

|

||||

|

||||

Ruby on Rails (Rails) is a full-stack web development framework written in Ruby, created by David Heinemeier Hansson in 2004\. Rails revolutionized web development by promoting "convention over configuration" and allowing developers to rapidly build web applications with fewer lines of code. It |

||||

|

||||

#### 1\. Performance |

||||

|

||||

Rails performs exceptionally well for typical CRUD (Create, Read, Update, Delete) applications, where database operations are straightforward and heavily optimized within the framework. However, as applications grow in complexity or require real-time features, Rails’ performance can become a challenge. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

Rails is often critiqued for its scalability limitations, but it can scale when combined with proper architecture and best practices. Techniques like database sharding, horizontal scaling, and using background jobs for heavy-lifting tasks can help. Still, it’s not the first choice for developers who anticipate massive scale, as it requires careful planning and optimization to avoid performance bottlenecks. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

Rails is a great framework for rapid development, especially for standard web applications, such as e-commerce platforms, blogs, or SaaS products. However, it’s less flexible when it comes to non-standard architectures or unique application needs. It’s designed with conventions in mind, so while those conventions help you move fast, they can become restrictive in more unconventional use cases. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

Next to JavaScript with NPM, Rails has one of the most mature ecosystems in web development, with a huge repository of gems (libraries) that can help speed up development. From user authentication systems to payment gateways, there’s a gem for almost everything, saving developers from reinventing the wheel. The community is also very active, and there are many resources, tutorials, and tools to support developers at every level. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

Rails is known for its easy learning curve, especially for those new to web development. The framework’s focus on convention over configuration means that beginners don’t need to make many decisions and can get a functional app up and running quickly. On top of that, Ruby’s readable syntax also makes it approachable for new devs. |

||||

|

||||

However, as the application grows, mastering the framework’s more advanced concepts and learning how to break through those pre-defined conventions can become a bit of a problem. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

Rails comes with a solid set of built-in security features, including protections against SQL injection, XSS (cross-site scripting), and CSRF (cross-site request forgery). By following Rails' conventions, developers can implement secure practices without much additional work. However, as with any framework, you still need to stay updated on security vulnerabilities and ensure proper coding practices are followed. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### 7\. Future-Proofing |

||||

|

||||

While Rails is still highly relevant and widely used, its growth has slowed down from its initial hype during 2010, and it’s no longer the hot, new framework. That said, it remains a solid choice for many businesses, especially those building content-heavy or e-commerce applications. With an established user base and regular updates, Rails is not going anywhere, but its popularity may continue to wane as newer frameworks gain traction. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

### Laravel |

||||

|

||||

|

||||

|

||||

Laravel is a PHP backend framework that was introduced in 2011 by Taylor Otwell. It has since become one of the most popular frameworks in the PHP ecosystem, known for its elegant syntax, ease of use, and focus on developer experience (known to some as the RoR of PHP). Laravel offers a range of built-in tools and features like routing, authentication, and database management, making it ideal for building full-featured web applications quickly. |

||||

|

||||

#### 1\. Performance |

||||

|

||||

Laravel performs well for most typical web applications, especially CRUD operations. However, for larger, more complex applications, performance can be a concern. Using tools like caching, query optimization, and Laravel’s built-in optimization features (such as queue handling and task scheduling) can help boost performance, but some extra work may be required for high-load environments. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

Laravel can scale, but like Rails, it requires careful attention to architecture and infrastructure. By using horizontal scaling techniques, microservices, and services like AWS or Laravel’s Vapor platform, you can build scalable applications. However, Laravel is often seen as better suited for small to medium applications without heavy scaling needs right out of the box. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

Laravel is highly flexible, allowing you to build a wide variety of web applications. With built-in features for routing, ORM, middleware, and templating, you can quickly build anything from small websites to enterprise applications. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

Contrary to popular belief (mainly due to a lack of hype around the technology), Laravel has a large, active community and a vast ecosystem of packages and third-party tools. With Laracasts, a popular video tutorial platform, and Laravel.io the community portal for Laravel developers, there are many ways to reach out and learn from others. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

Laravel has a relatively gentle learning curve, especially for developers familiar with PHP. Its focus on simplicity, readable syntax, and built-in features make it easy to pick up for beginners. However, mastering the full list of Laravel’s capabilities and best practices can take some time for more complex projects. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

Just like others, Laravel comes with built-in security features, such as protection against common vulnerabilities like SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). The framework adheres to best security practices, making it easier for developers to build secure applications without much extra effort. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### 7\. Future-proofing |

||||

|

||||

Laravel is still highly relevant and continues to grow in popularity (having [recently secured a very substantial](https://laravel-news.com/laravel-raises-57-million-series-a) amount of money). It has a regular release schedule and a strong commitment to maintaining backward compatibility. With its consistent updates, active community, and growing ecosystem, Laravel is a solid choice for long-term projects. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

### Phoenix |

||||

|

||||

|

||||

|

||||

#### **Overview and History** |

||||

|

||||

Phoenix is a backend framework written in Elixir, designed to create high-performance, scalable web applications. It leverages Elixir's concurrency and fault-tolerant nature (inherited from the Erlang ecosystem) to build real-time, distributed systems. |

||||

|

||||

#### 1\. Performance |

||||

|

||||

Phoenix is known for its outstanding performance, particularly in handling large numbers of simultaneous connections. Thanks to Elixir’s concurrency model and lightweight processes, Phoenix can serve thousands of requests with minimal resource consumption. Real-time applications benefit especially from Phoenix’s built-in WebSockets and its LiveView feature for updating UIs in real-time without the need for JavaScript-heavy frameworks. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

Scalability is one of Phoenix’s biggest features. Because it runs on the Erlang VM, which was designed for distributed, fault-tolerant systems, Phoenix can scale horizontally and vertically with ease. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

Phoenix is highly flexible, supporting everything from traditional web applications to real-time applications like chat apps and live updates. Its integration with Elixir’s functional programming paradigm and the BEAM virtual machine allows developers to build fault-tolerant, systems. The flexibility extends to how you can structure applications, scale components, and handle real-time events seamlessly. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

Phoenix has a growing and passionate community, but it’s still smaller compared to more established frameworks like Rails or Laravel. However, it benefits from Elixir’s ecosystem, including libraries for testing, real-time applications, and database management. The community is supportive, and the framework’s documentation is detailed and developer-friendly, making it easy to get started. |

||||

|

||||

⭐ **Rating: 2.5/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

Phoenix, being built on Elixir, has a steeper learning curve than frameworks based on more common languages like JavaScript or PHP. Elixir’s functional programming model, while powerful, can be challenging for developers unfamiliar with the paradigm. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

As with most of the popular backend frameworks, Phoenix comes with strong built-in security features, including protections against common vulnerabilities like XSS, SQL injection, and CSRF. Additionally, because Elixir processes are isolated, Phoenix applications are resilient to many types of attacks. While some manual work is still required to ensure security, Phoenix adheres to best practices and provides tools to help developers write secure code. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### 7\. Future-Proofing |

||||

|

||||

Phoenix has a bright future thanks to its solid foundation in the Erlang/Elixir ecosystem, which is known for long-term reliability and support. While the framework might be technologically sound and future-proof, the key to having Elixir in the future will depend on the growth of its popularity. If Elixir’s community keeps growing, we’ll be able to enjoy the framework for a long time. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

### Actix |

||||

|

||||

|

||||

|

||||

Actix is a powerful, high-performance web framework written in Rust. It’s based on the actor model, which is ideal for building concurrent, distributed systems. Actix is known for its incredible performance and memory safety, thanks to Rust’s strict compile-time guarantees. |

||||

|

||||

#### 1\. Performance |

||||

|

||||

Actix is one of the fastest web frameworks available, thanks to Rust’s system-level performance and Actix’s use of asynchronous programming. As it happens with JavaScript-based frameworks, it can handle a large number of requests with minimal overhead, making it ideal for high-performance, real-time applications. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 2\. Scalability |

||||

|

||||

The actor model makes Actix the best at handling concurrent tasks and scaling across multiple threads or servers. Rust’s memory safety model and Actix’s architecture make it highly efficient in resource usage, meaning applications can scale well without excessive overhead. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### 3\. Flexibility |

||||

|

||||

Actix is flexible but requires a deeper understanding of Rust’s ownership and concurrency model to fully take advantage of it. It’s great for building both small, fast APIs and large, service architectures. While Actix is powerful, it’s less forgiving compared to other popular backend frameworks like Node.js or Python’s Flask, where rapid prototyping is easier. |

||||

|

||||

⭐ **Rating: 3/5** |

||||

|

||||

#### 4\. Community and Ecosystem |

||||

|

||||

Rust’s ecosystem, while growing, is still smaller compared to more established languages like JavaScript or Python. However, the Rust community is highly engaged, and support is steadily improving. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### 5\. Learning Curve |

||||

|

||||

Actix inherits Rust’s learning curve, which can be steep for developers new to systems programming or Rust’s strict memory management rules. However, for developers already familiar with Rust, Actix can be a great gateway into web development. |

||||

|

||||

⭐ **Rating: 2/5** |

||||

|

||||

#### 6\. Security |

||||

|

||||

Rust is known for its memory safety and security guarantees, and Actix benefits from these inherent strengths. Rust’s compile-time checks prevent common security vulnerabilities like null pointer dereferencing, buffer overflows, and data races. While these features tackle one side of the security ecosystem, more relevant ones like web-related vulnerabilities are not tackled by the framework. |

||||

|

||||

⭐ **Rating: 2.5/5** |

||||

|

||||

#### **7\. Future-Proofing** |

||||

|

||||

Rust’s growing popularity and adoption, especially in performance-critical areas, ensure that Actix has a strong future. While Actix’s ecosystem is still developing, the framework is regularly maintained and benefits from Rust’s long-term stability. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

## Conclusion |

||||

|

||||

Choosing the right backend framework is a critical decision that can shape the future of your project. In 2024, developers have more powerful options than ever, from popular backend frameworks like Ruby on Rails, Laravel or Next.js to high-performance focused, like Fastify, SvelteKit, Phoenix, and Actix. Each framework has its own strengths, making it essential to consider factors such as performance, scalability, flexibility, and the learning curve to ensure you pick the right tool for the job. |

||||

|

||||

Ultimately, there’s no proverbial silver bullet that solves all your problems. Your choice will depend on your project’s needs, your team's expertise, and the long-term goals of your application. |

||||

|

||||

So take your time, weigh the pros and cons, and pick the framework that aligns best with your vision for the future. |

||||

@ -0,0 +1,186 @@ |

||||

--- |

||||

title: '11 DevOps Principles and Practices to Master: Pro Advice' |

||||

description: 'Elevate your game by understanding this set of key DevOps principles and practices. Gain pro insights for a more efficient, collaborative workflow!' |

||||

authorId: fernando |

||||

excludedBySlug: '/devops/principles' |

||||

seo: |

||||

title: '11 DevOps Principles and Practices to Master: Pro Advice' |

||||

description: 'Elevate your game by understanding this set of key DevOps principles and practices. Gain pro insights for a more efficient, collaborative workflow!' |

||||

ogImageUrl: 'https://assets.roadmap.sh/guest/devops-engineer-skills-tlace.jpg' |

||||

isNew: true |

||||

type: 'textual' |

||||

date: 2024-09-24 |

||||

sitemap: |

||||

priority: 0.7 |

||||

changefreq: 'weekly' |

||||

tags: |

||||

- 'guide' |

||||

- 'textual-guide' |

||||

- 'guide-sitemap' |

||||

--- |

||||

|

||||

|

||||

|

||||

If you truly want to understand what makes DevOps so effective, it’s essential to know and master its core principles. |

||||

|

||||

DevOps is more than just a collaboration between development and operations teams; it's built on fundamental principles that simplify software delivery. |

||||

|

||||

In this guide, I’m going to dive deep into the core principles and practices that make the DevOps practice “tick.” If you’re a DevOps engineer or you want to become one, these are the DevOps principles you should master. |

||||

|

||||

I’ll explain the following principles in detail: |

||||

|

||||

1. Understanding the culture you want to join |

||||

2. CI/CD |

||||

3. Knowing how to use infrastructure as code tools. |

||||

4. Understanding containerization. |

||||

5. Monitoring & observability. |

||||

6. Security |

||||

7. Reducing the toil and technical debt. |

||||

8. Adopting GitOps. |

||||

9. Understanding that you’ll be learning & improving constantly. |

||||

10. Understanding basic programming concepts. |

||||

11. Embracing automation. |

||||

|

||||

## 1\. Understanding DevOps Culture |

||||

|

||||

[DevOps](https://roadmap.sh/devops) culture is the foundation for all DevOps principles. At its core, it's about fostering a collaborative environment where development and operations teams work together seamlessly. In traditional software development, developers focus on writing code while the operations team is tasked with deploying and maintaining it. This division often leads to misunderstandings and delays. |

||||

|

||||

Instead of operating in silos, these teams, when they follow the DevOps culture, end up sharing a common goal: delivering high-quality software efficiently. This cultural shift reduces the "us versus them" mentality that many organizations suffer, fostering cooperation instead of blame. |

||||

|

||||

DevOps culture encourages development and operations to collaborate throughout the software development lifecycle (SDLC). By aligning their goals and encouraging open communication, both teams can work together to improve the process of development, ultimately resulting in faster and more reliable software delivery. |

||||

|

||||

Key components of this culture include shared responsibility, transparency, and a commitment to continuous improvement. |

||||

|

||||

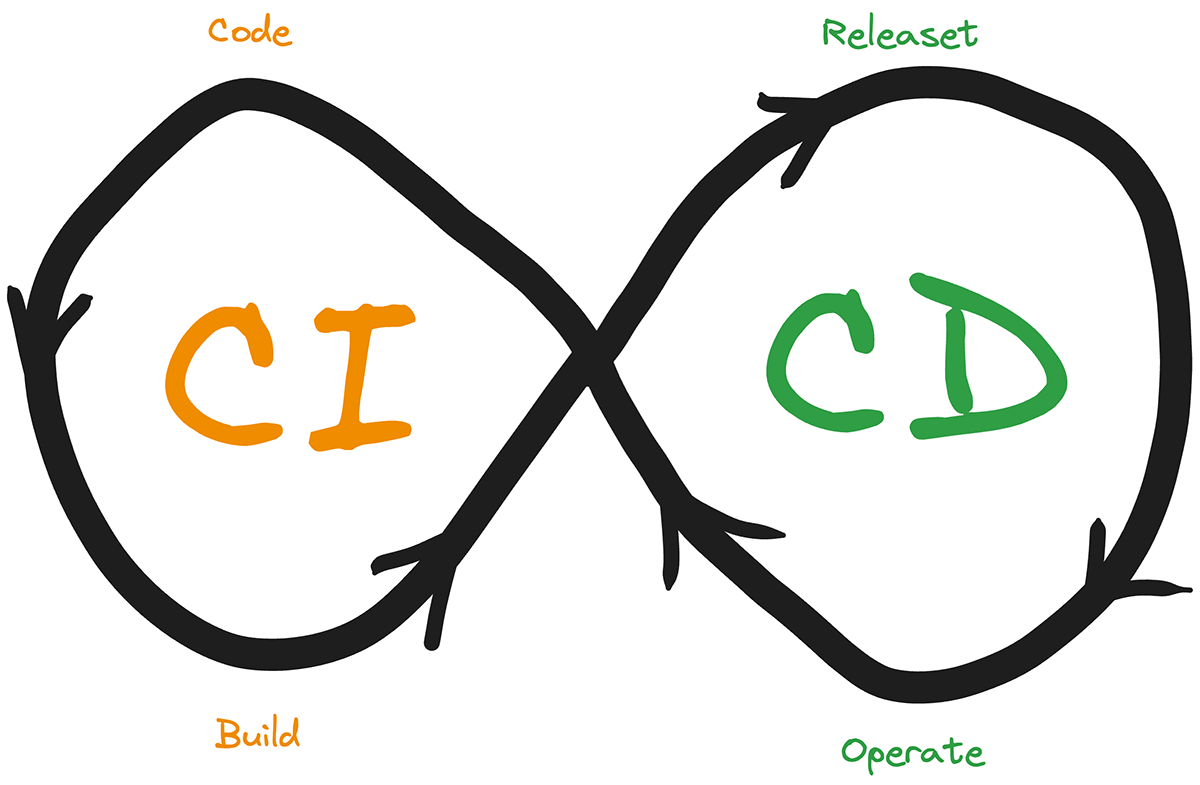

## 2\. Continuous Integration and Continuous Deployment (CI/CD) |

||||

|

||||

|

||||

|

||||

Continuous Integration (CI) and Continuous Deployment (CD) are central to DevOps principles. CI is the practice of frequently integrating code changes into a shared repository, ensuring that new code is automatically tested and validated. This practice helps catch bugs early, reducing the risk of introducing issues into the main codebase. CI allows devs and ops teams to work more efficiently, improving the overall quality of the software. |

||||

|

||||

Continuous Deployment, on the other hand, takes things a step further by automatically deploying code changes to production once they pass the CI tests. This ensures that new features and bug fixes are delivered to users as quickly as possible. Together, CI and CD form a pipeline that streamlines the software development lifecycle, from code commit to production deployment in seconds (or in some cases, minutes). |

||||

|

||||

Implementing CI/CD involves using various tools and practices. Jenkins, GitLab CI, CircleCI, and Travis CI are popular options for setting up CI pipelines, while tools like Spinnaker and Argo CD help with CD. |

||||

|

||||

## 3\. Infrastructure as Code (IaC) |

||||

|

||||

|

||||

|

||||

Infrastructure as Code (IaC) is a game-changer in the DevOps world. Traditionally, provisioning infrastructure involved manual setup and configuration, which was time-consuming and, of course, prone to human error. IaC changes the game by treating infrastructure the same way we treat application code: as a set of scripts or configurations that can be version-controlled, tested, and automated. |

||||

|

||||

Through IaC, DevOps teams can ensure consistency and repeatability across different environments. It eliminates the "works on my machine" problem by providing a standardized environment for software to run on, whether it's on a developer's local machine, in a staging environment, or in production. |

||||

|

||||

Over the years, IaC tools have evolved quite a lot. At the start of it all, players like Chef and Puppet introduced the concept of configuration management, allowing you to define the desired state of your systems. Ansible took that one step further with its agentless architecture, making it easier to manage infrastructure at scale. Terraform took IaC to the next level by providing a tool-agnostic way to provision resources across multiple cloud providers, making it a favorite among DevOps engineers. |

||||

|

||||

## 4\. Containerization |

||||

|

||||

|

||||

|

||||

Containerization is a core practice and one of the main devops principles to constantly apply. Containers provide a lightweight, portable way to package software along with its dependencies, ensuring that it runs consistently across different environments. Containers share the host system's kernel, making them more efficient and faster to start up than virtual machines. |

||||

|

||||

These “containers” have been playing a key role in solving one of the age-old problems in software development: environment inconsistencies. By encapsulating an application and its dependencies into a container, you can ensure that it runs the same way on a developer's laptop as it does in production. This consistency simplifies the development process and reduces the risk of environment-related problems. |

||||

|

||||

In this space, Docker is the most popular tool for creating and managing containers (although not the only one), offering a simple way to build, package, and distribute containerized applications. Kubernetes takes containerization to the next level by providing a platform for orchestrating containers at scale. With Kubernetes, you can automate the deployment, scaling, and management of containerized applications, making it easier to manage complex, multi-container applications. |

||||

|

||||

While there is no excuse to not use DevOps at this stage in any software project, some of the biggest benefits of using containers in the DevOps lifecycle are: consistency, scalability, and portability. |

||||

|

||||

In other words, they make it easier to move applications between different environments. They also enable more efficient use of resources, as multiple containers can run on the same host without the overhead of a full virtual machine. |

||||

|

||||

## 5\. Monitoring and Observability |

||||

|

||||

|

||||

|

||||

Monitoring and observability are essential components of the DevOps practice and key principles for any DevOps team. While monitoring focuses on tracking the health and performance of your systems, observability goes a step further by providing insights into the internal state of your applications based on the data they produce. Together, they enable DevOps teams to detect and troubleshoot issues quickly, ensuring that applications run smoothly. |

||||

|

||||

Continuous monitoring involves constantly tracking key metrics such as CPU usage, memory consumption, response times, and error rates. Tools like Prometheus, Grafana, and the ELK Stack (Elastic, Logstash, Kibana) are popular choices for collecting and visualizing this data. All public cloud providers also have their own solutions, in some cases even based on the open-source versions mentioned before. |

||||

|

||||

Whatever the tool of your choice is, they all provide real-time insights into the performance of your applications and infrastructure, helping you identify potential issues before they impact users. |

||||

|

||||

Now the practice of observability extends beyond monitoring by providing a deeper understanding of how your systems are behaving. It involves collecting and analyzing logs, metrics and traces to gain insights into the root cause of issues. OpenTelemetry, for instance, is an emerging standard for collecting telemetry data, offering a unified way to instrument, collect, and export data for analysis. This standardization makes it easier to integrate observability into your DevOps practices, regardless of the tools you're using. |

||||

|

||||

## 6\. Security in DevOps |

||||

|

||||

|

||||

|

||||

Security is a critical aspect of the DevOps lifecycle, and it's something that needs to be integrated from the very beginning of any project expected to see the light of production at one point. |

||||

|

||||

DevSecOps is the practice of embedding security into the DevOps pipeline, ensuring that security measures are applied consistently throughout the software development lifecycle (reviewing code for vulnerabilities, checking IaC scripts, etc). Through this practice, DevOps helps catch vulnerabilities early and greatly reduce the risk of security breaches in production. |

||||

|

||||

Sadly, in many companies and teams that follow more traditional practices, security tends to be an afterthought, gaining importance only after the code is written and deployed. This approach can lead to costly and time-consuming fixes. DevSecOps, on the other hand, integrates security into every stage of the development and operations process, from code development to deployment. In the end, this helps security teams to automate security testing, identify vulnerabilities early, and enforce security policies consistently. All without having to read a single line of code themselves. |

||||

|

||||

In this space, tools like Snyk, Aqua Security, and HashiCorp Vault are king and they can help you integrate security into your DevOps workflows. |

||||

|

||||

## 7\. Reducing Toil and Technical Debt |

||||

|

||||

Toil and technical debt are two of the biggest productivity killers in software development. Toil refers to the repetitive, manual tasks that don't add direct value to the product, while technical debt is the accumulation of shortcuts and workarounds that make the codebase harder to maintain over time. Both can slow down your development workflow and make it more challenging to deliver new features. |

||||

|

||||

And because of that, one of the big and important DevOps principles is to aim to reduce both. Yes, DevOps teams can also help reduce technical debt. |

||||

|

||||

Reducing toil involves automating repetitive tasks to free up time for more valuable work. Tools like Ansible, Chef, and Puppet can help automate infrastructure management, while CI/CD pipelines can automate the build, test, and deployment processes. In the end, less manual work translates to reducing the chances of errors and giving team members the chance to focus on more interesting and strategic tasks. |

||||

|

||||

Technical debt, on the other hand, requires a proactive approach to address. It's about finding the right balance between delivering new features and maintaining the quality of the codebase. Regularly refactoring code, improving documentation, and addressing known issues can help keep technical debt in check. Of course, this also needs to be balanced with their ability to deliver new features and move the product forward. |

||||

|

||||

## 8\. GitOps: The Future of Deployment |

||||

|

||||

|

||||

|

||||

GitOps is a new practice that takes the principles of Git and applies them to operations. It's about using Git as the single source of truth for your infrastructure and application configurations. By storing everything in Git, you can use version control to manage changes, track history, and facilitate collaboration among development and operations teams. |

||||

|

||||

You essentially version your entire infrastructure with the same tools you version your code. |

||||

|

||||

In other words, all changes to the infrastructure and applications are made through pull requests to the Git repository. Once a change is merged, an automated process applies the change to the target environment. This approach provides a consistent, auditable, and repeatable way to manage deployments, making it easier to maintain the desired state of your systems. |

||||

|

||||

Through GitOps, teams can manage deployments and gain the following benefits: improved visibility, version control, and traceability. |

||||

|

||||

This methodology aligns well with the DevOps principles of automation, consistency, and collaboration, making it easier to manage complex deployments at scale. |

||||

|

||||

Key tools for implementing GitOps include Argo CD and Flux. These tools help you automate the deployment process by monitoring the Git repository for changes and applying them to the cluster. |

||||

|

||||

## 9\. Continuous Learning and Improvement |

||||

|

||||

|

||||

|

||||

In general the world of tech is constantly evolving and changing and continuous learning and improvement are essential practices for staying ahead and relevant. |

||||

|

||||

That said, in the DevOps landscape change is also a constant, with new tools, practices, and technologies emerging all the time. If you think about it, before 2006 we didn’t even have containers. |

||||

|

||||

So to keep up, DevOps engineers and teams need to be committed to learning and improving continuously. |

||||

|

||||

Encouraging a culture of continuous learning within your team can help keep everyone up-to-date with the latest DevOps trends and tools. This can include participating in conferences, attending workshops, and enrolling in online courses. Reading books like "The Phoenix Project," "The Unicorn Project," and "The DevOps Handbook" can provide valuable insights and inspiration. |

||||

|

||||

If you’re not into books, then websites like [12factor.net](http://12factor.net), [OpenGitOps.dev](http://OpenGitOps.dev), and [CNCF.](http://CNCF.)io are also great resources for staying current with industry best practices. |

||||

|

||||

Continuous improvement goes hand-in-hand with continuous learning. It's about regularly reviewing and refining your processes, identifying areas for improvement after failing and experimenting with new approaches. This iterative approach helps you optimize the development process, improve collaboration between devs and operations, and deliver better software. |

||||

|

||||

## 10\. Understanding Programming Concepts |

||||

|

||||

|

||||

|

||||

While not every DevOps engineer needs to be a full-fledged developer, having a solid understanding of programming concepts is key to success in the professional world. |

||||

|

||||

A good grasp of programming helps bridge the gap between development and operations, making it easier to collaborate and understand each other's needs. Which, if you think about it, is literally the core principle of the DevOps practice. |

||||

|

||||

Understanding programming translates to being able to write scripts in languages like Bash, Python, or PowerShell to automate tasks, manage infrastructure, and interact with APIs. This can range from simple tasks like automating server setup to more complex operations like orchestrating CI/CD pipelines. |

||||

|

||||

Understanding programming concepts also enables you to better manage the software development lifecycle. It helps you understand how code changes affect system performance, security, and stability. This insight allows you to make more informed decisions when designing and implementing infrastructure and deployment processes. |

||||

|

||||

## 11\. Automation in DevOps |

||||

|

||||

Automation is at the heart of DevOps principles. It's about automating repetitive and manual tasks to accelerate processes, reduce errors, and free up time for more strategic work. We partially covered this concept before as part of the toil reduction principle. |

||||

|

||||

However, it’s important to explain that automation not only involves code builds and tests, it also includes infrastructure provisioning and application deployment. In other words, automation plays a key role in every stage of the DevOps lifecycle. |

||||

|

||||

The whole point of automation is to accelerate processes. It enables faster, more consistent, and more reliable software delivery. By automating tasks like code integration, testing, and deployment, you can reduce the time it takes to get new features into production and minimize the risk of human error. |

||||

|

||||

There are many areas in the DevOps lifecycle where automation can be applied, in fact, the challenge would be to find areas where it wouldn’t make sense to apply it. These include CI/CD pipelines, infrastructure provisioning, configuration management, monitoring, and security testing. In this area, some of the most popular DevOps tools are Jenkins, Ansible, Terraform, and Selenium. They all provide the building blocks for automating these tasks, allowing you to create a seamless and efficient development workflow that everyone enjoys. |

||||

|

||||

If you’re looking to start implementing automation in your DevOps workflow, consider starting small and gradually expanding automation efforts, using version control for automation scripts (Git is a great option), and continuously monitoring and refining automated processes. |

||||

|

||||

It's important to find a balance between automation and human intervention, ensuring that automation enhances the development workflow without introducing unnecessary complexity. |

||||

|

||||

## Conclusion |

||||

|

||||

And there you have it—the core principles and practices of DevOps in a nutshell. By mastering them, you'll be well on your way to becoming a great [DevOps engineer](https://roadmap.sh/devops/devops-engineer). |

||||

|

||||

Whether you're just starting out or looking to level up your DevOps game, there's always something new to learn and explore. So keep experimenting, keep learning, and most importantly, keep having fun\! |

||||

|

||||

After all, DevOps isn't just about making systems run smoothly—it's about building a culture that encourages innovation, collaboration, and growth. As you dive deeper into the DevOps practice, you'll not only become more skilled but also contribute to creating better software and more agile teams. |

||||

@ -0,0 +1,376 @@ |

||||

--- |

||||

title: 'Top 7 Frontend Frameworks to Use in 2024: Pro Advice' |

||||

description: 'Get expert advice on frontend frameworks for 2024. Elevate your web development process with these top picks.' |

||||

authorId: fernando |

||||

excludedBySlug: '/frontend/frameworks' |

||||

seo: |

||||

title: 'Top 7 Frontend Frameworks to Use in 2024: Pro Advice' |

||||

description: 'Get expert advice on frontend frameworks for 2024. Elevate your web development process with these top picks.' |

||||

ogImageUrl: 'https://assets.roadmap.sh/guest/top-frontend-frameworks-wmqwc.jpg' |

||||

isNew: true |

||||

type: 'textual' |

||||

date: 2024-09-26 |

||||

sitemap: |

||||

priority: 0.7 |

||||

changefreq: 'weekly' |

||||

tags: |

||||

- 'guide' |

||||

- 'textual-guide' |

||||

- 'guide-sitemap' |

||||

--- |

||||

|

||||

|

||||

|

||||

With the growing complexity of web applications, selecting the right frontend framework is more important than ever. Your choice will impact performance, scalability, and development speed. Not to mention the future-proofing of your application. |

||||

|

||||

In 2024, web development is increasingly about building fast, scalable, and highly interactive user interfaces. Frontend frameworks now need to support real-time interactions, handle large-scale data, and provide excellent developer experiences by simplifying the web development process. |

||||

|

||||

Picking the right frontend framework isn't just about what's popular—it's about finding the tool that fits your project’s needs, whether you’re building a small static site or a large, complex application. |

||||

|

||||

The top frontend frameworks for web development that I’ll cover as part of this article are: |

||||

|

||||

* React |

||||

* VueJS |

||||

* Angular |

||||

* Svelte |

||||

* Solid.js |

||||

* Qwik |

||||

* Astro |

||||

|

||||

## Criteria for Evaluating Frontend Frameworks |

||||

|

||||

Finding what the “best frontend framework” looks like is not easy. In fact, it’s impossible without the particular characteristics of your project, your team, and all other surrounding details. They will all inform your final decision. |

||||

|

||||

To help in that process, I’ve defined our own set of key indicators that will give you an idea of how we’re measuring the value of each of the leading [frontend development](https://roadmap.sh/frontend) frameworks covered in this article. |

||||

|

||||

1. **Performance:** How well does the frontend framework handle real-world scenarios, including page load times, rendering speed, and efficient resource use? |

||||

2. **Popularity and Community Support:** Is there a large community around the framework? How easy is it to find tutorials, forums, and third-party tools? |

||||

3. **Learning Curve:** Is the framework easy to learn for new developers, or does it require mastering complex patterns and paradigms? |

||||

4. **Ecosystem and Extensibility:** Does the framework offer a robust ecosystem of libraries, plugins, and tooling to extend its functionality? |

||||

5. **Scalability and Flexibility:** Can the framework handle both small and large projects? Is it flexible enough to support different project types, from single-page applications (SPAs) to complex enterprise solutions? |

||||

6. **Future-Proofing:** Is the framework actively maintained and evolving? Will it remain relevant in the next few years, based on trends and support? |

||||

|

||||

### My go-to frontend framework of choice |

||||

|

||||

My go-to framework is React because it has the highest ecosystem score and is one of the most future-proofed ones. |

||||

|

||||

I’ve applied the above criteria to the best frontend development frameworks I’m covering below in this guide. This table gives you a snapshot view of how they all compare according to my ratings, and I’ll explain the details further below. |

||||

|

||||

|

||||

|

||||

Of course, the choice of React is mine, and mine alone. You have to consider your own projects and your own context to understand what the best choice for you would be. |

||||

|

||||

Let’s get into the selection and what their strengths and weaknesses are to help you select the right one for you. |

||||

|

||||

## Top 7 Frontend Development Frameworks in 2024 |

||||

|

||||

### React |

||||

|

||||

|

||||

|

||||

React was created by Facebook in 2013 and has since become one of the most popular frontend frameworks (though technically a library). Initially developed to solve the challenges of building dynamic and complex user interfaces for Facebook’s apps, React introduced the revolutionary concept of the virtual DOM (Document Object Model), which allowed developers to efficiently update only the parts of the UI that changed instead of re-rendering the entire page. |

||||

|

||||

#### Performance |

||||

|

||||

React uses a virtual DOM (the Virtual Document Object Model) to optimize performance by minimizing the number of direct manipulations to the actual DOM. This allows React to efficiently update only the components that need to change, rather than re-rendering the entire page. While React is fast, performance can be impacted in large applications if not managed carefully, especially with unnecessary re-renders or poorly optimized state management (two concepts that have created a lot of literature around them, and yet, most developers still get wrong). |

||||

|

||||

**⭐ Rating: 4/5** |

||||

|

||||

#### Popularity and Community Support |

||||

|

||||

React is one of the most popular frontend frameworks worldwide, with widespread adoption in both small and large-scale applications. Its massive community means there's a wealth of tutorials, libraries, and third-party tools available. With strong backing from Meta and continuous contributions from developers globally, React has one of the richest ecosystems and the largest support networks. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### Learning Curve |

||||

|

||||

React has a moderate learning curve. It’s relatively easy to get started with, especially if you’re familiar with JavaScript, but understanding concepts like JSX and hooks can take some time (especially if you throw in the relatively new server components). Once you grasp the basics, React becomes easier to work with, but mastering advanced patterns and state management solutions can add complexity. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### Ecosystem and Extensibility |

||||

|

||||

React has one of the most mature and extensive ecosystems in the frontend space. With a vast selection of libraries, tools, and plugins, React can be extended to meet virtually any development need. Key libraries like React Router (for routing) and Redux (for state management) are widely adopted, and there are countless third-party components available. React's ecosystem is one of its greatest strengths, offering flexibility and extensibility for all kinds of projects. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### Scalability and Flexibility |

||||

|

||||

React is highly flexible and can scale to meet the needs of both small and large applications. Its component-based architecture allows for modular development, making it easy to manage complex UIs. React is adaptable to various types of projects, from simple SPAs to large, enterprise-level applications. However, managing state in larger applications can become challenging, often requiring the use of external tools like Redux or Context API for better scalability. |

||||

|

||||

**⭐ Rating: 4.5/5** |

||||

|

||||

#### Future-Proofing |

||||

|

||||

React remains one of the most future-proof frameworks, with continuous updates and strong backing from Meta (Facebook). Its widespread adoption ensures that it will be well-supported for years to come. The ecosystem is mature, but React is constantly evolving with features like concurrent rendering and server-side components. The size of the community and corporate support make React a safe bet for long-term projects. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

### Vue.js |

||||

|

||||

###  |

||||

|

||||

Vue.js was developed in 2014 by Evan You, who had previously worked on AngularJS at Google. His goal was to create a framework that combined the best parts of Angular’s templating system with the simplicity and flexibility of modern JavaScript libraries like React. Vue is known for its progressive nature, which means developers can incrementally adopt its features without having to completely rewrite an existing project. |

||||

|

||||

#### Performance |

||||

|

||||

Vue’s reactivity system provides a highly efficient way to track changes to data and update the DOM only when necessary. Its virtual DOM implementation is lightweight and fast, making Vue a strong performer for both small and large applications. Vue 3’s Composition API has further optimized performance by enabling more granular control over component updates. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Popularity and Community Support: |

||||

|

||||

Vue.js has grown significantly in popularity, especially in regions like China and Europe, and is widely adopted by startups and smaller companies. Although it doesn't have the corporate backing of React or Angular, its community is passionate, and the framework enjoys strong support from individual contributors. Vue’s ecosystem is robust, with many official libraries and third-party plugins, making it a favorite among developers looking for a balance of simplicity and power. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Learning Curve |

||||

|

||||

Vue’s syntax is clean and straightforward, with a structure that is easy to understand even for those new to frontend frameworks. Features like two-way data binding and directives are intuitive, making Vue much easier to pick up compared to React or Angular. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Ecosystem and Extensibility |

||||

|

||||

Vue has a rich and growing ecosystem, with many official libraries like Vue Router, Vuex (for state management), and Vue CLI (for project setup). Additionally, its ecosystem includes many high-quality third-party plugins that make it easy to extend Vue applications. While not as large as React’s, Vue’s ecosystem is well-curated and highly effective, making it both powerful and developer-friendly. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Scalability and Flexibility |

||||

|

||||

Vue is extremely flexible and scalable. It is designed to be incrementally adoptable, which means you can use it in small parts of a project or as the foundation for a large-scale application. Vue’s core libraries, along with tools like Vuex, make it highly scalable. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Future-Proofing |

||||

|

||||

Vue is actively maintained and supported by a strong open-source community. Its development pace is steady. While it doesn’t have the same level of corporate backing as React or Angular, its growing popularity and enthusiastic community ensure its longevity. Vue is a solid choice for long-lasting projects. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

### Angular |

||||

|

||||

|

||||

|

||||

Angular was first introduced by Google in 2010 as AngularJS, a framework that revolutionized web development by introducing two-way data binding and dependency injection. However, AngularJS eventually became difficult to maintain as applications grew more complex, leading Google to rewrite the framework from the ground up in 2016 with the release of Angular 2 (commonly referred to simply as "Angular"). |

||||

|

||||

#### Performance |

||||

|

||||

Angular offers solid performance, especially in large enterprise applications. It uses a change detection mechanism combined with the Ahead-of-Time (AOT) compiler to optimize performance by compiling templates into JavaScript code before the browser runs them. The built-in optimizations are robust, but Angular’s size and complexity can lead to performance overhead if not managed correctly. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### Popularity and Community Support |

||||

|

||||

Angular is backed by Google and is a popular choice for enterprise-level applications, especially in larger organizations. Its community is active, and Google’s long-term support ensures regular updates and improvements. Angular has a strong presence in corporate environments, and its ecosystem includes official tooling and libraries. However, it is less commonly used by smaller teams and individual developers compared to React and Vue. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Learning Curve |

||||

|

||||

Angular has a steep learning curve due to its complexity and reliance on TypeScript. New web developers may find it challenging to grasp Angular’s concepts, such as dependency injection, decorators, and modules. The comprehensive nature of Angular also means there’s a lot to learn before you can be fully productive, but for experienced developers working on large-scale applications, the structure and tooling can be highly beneficial. |

||||

|

||||

⭐ **Rating: 3/5** |

||||

|

||||

#### Ecosystem and Extensibility |

||||

|

||||

Angular’s ecosystem is comprehensive and fully integrated, offering everything developers need right out of the box. Angular includes official libraries for routing, HTTP client, forms, and more, all provided and maintained by Google. The Angular CLI is a robust tool for managing projects. However, Angular's strict architecture means less flexibility when integrating with external libraries compared to React or Vue, though the ecosystem is extensive. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Scalability and Flexibility |

||||

|

||||

Angular is built with scalability in mind, making it ideal for large, complex applications. Its strict structure and reliance on TypeScript make it a great fit for projects that require clear architecture and maintainability over time. Angular’s modularity and out-of-the-box features like dependency injection and lazy loading enable it to handle enterprise-level web applications with multiple teams. However, its strictness can reduce flexibility for smaller, less complex projects. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### Future-Proofing |

||||

|

||||

Angular has a clear roadmap and long-term support, making it one of the most future-proof frameworks, especially for enterprise applications. Google’s regular updates ensure that Angular remains competitive in the evolving frontend ecosystem. Its TypeScript foundation, strong architecture, and large-scale adoption make it a reliable option for projects with long lifecycles. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

### Svelte |

||||

|

||||

|

||||

|

||||

Svelte is a relatively new entrant in the frontend landscape, created by Rich Harris in 2016\. Unlike traditional frameworks like React and Vue, which do much of their work in the browser, Svelte takes a different approach. It shifts most of the work to compile time, meaning that the framework compiles the application code into optimized vanilla JavaScript during the build process, resulting in highly efficient and fast-running code. |

||||

|

||||

#### Performance |

||||

|

||||

Svelte takes a unique approach to performance by compiling components into highly optimized vanilla JavaScript at build time, removing the need for a virtual DOM entirely. This leads to very fast runtime performance and smaller bundle sizes, as only the necessary code is shipped to the browser. Svelte excels in small, fast-loading applications, making it one of the fastest frontend frameworks available. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### Popularity and Community Support |

||||

|

||||

Svelte has seen rapid growth in popularity (partially due to its novel approach). While its community is smaller compared to React, Vue, or Angular, it’s highly engaged and growing steadily. Svelte has fewer third-party libraries and tools, but the community is working hard to expand its ecosystem. It's particularly popular for smaller projects and developers who want a minimalistic framework. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### Learning Curve |

||||

|

||||

Svelte is relatively easy to learn, especially for web developers familiar with modern JavaScript. Its component-based structure is intuitive, and there’s no need to learn a virtual DOM or complex state management patterns. The absence of a virtual DOM and the simplicity of Svelte’s syntax make it one of the easiest frontend frameworks to pick up. |

||||

|

||||

⭐ **Rating: 4.5/5** |

||||

|

||||

#### Ecosystem and Extensibility |

||||

|

||||

Svelte’s ecosystem is still maturing compared to more established frameworks. While it lacks the extensive third-party library support of React or Vue, Svelte’s core tools like SvelteKit (for building full-stack applications) provide much of what is needed for most use cases. That said, the growing community is actively contributing to expanding the ecosystem and its extensive documentation. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### Scalability and Flexibility |

||||

|

||||

Svelte is highly flexible and performs well in small to medium-sized projects. It’s great at creating fast, lightweight applications with minimal boilerplate. While Svelte’s compile-time approach leads to excellent performance, the truth is Svelte is still too new and untested, so its scalability for very large projects or teams is still to be determined. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

#### Future-Proofing |

||||

|

||||

Svelte is gaining momentum as a modern, high-performance framework, and its unique approach has attracted a lot of attention. While the community is still smaller than that of React or Vue, it is growing rapidly, and the introduction of tools like SvelteKit further enhances its long-term viability. Svelte’s focus on simplicity and performance means it has the potential to become a significant player, but it's still early in terms of large-scale enterprise adoption. |

||||

|

||||

⭐ **Rating: 4/5** |

||||

|

||||

### Solid.js |

||||

|

||||

|

||||

|

||||

Solid.js is a more recent addition to the frontend ecosystem, developed by Ryan Carniato in 2018\. Inspired by React’s declarative style, Solid.js seeks to offer similar features but with even better performance by using a fine-grained reactivity system. Unlike React, which uses a virtual DOM, Solid compiles its reactive components down to fine-grained, efficient updates, reducing overhead and increasing speed. |

||||

|

||||

#### Performance |

||||

|

||||

Solid.js is designed for performance, using a fine-grained reactivity system to ensure that only the necessary parts of the DOM are updated. This eliminates the need for a virtual DOM, resulting in highly efficient rendering and state updates. Solid’s performance is often considered one of the best in the frontend space, especially for applications with complex state management. |

||||

|

||||

⭐ **Rating: 5/5** |

||||

|

||||

#### Popularity and Community Support |

||||

|

||||

Solid.js is still a relatively new player in the frontend space, but it is gaining traction due to its high performance and fine-grained reactivity model. The community is smaller compared to other frameworks but highly enthusiastic, and interest in Solid.js is growing quickly. While it has fewer resources and libraries available compared to larger frameworks, it is gradually building a strong support network. |

||||

|

||||

⭐ **Rating: 3.5/5** |

||||

|

||||

#### Learning Curve |

||||

|

||||